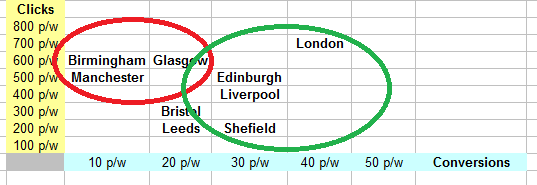

The concept of elasticity is used to measure the degree of market response to price changes (price sensitivity).

- Elasticity is a measure of demand responsiveness.

- It can be computed over a range of prices and quantities.
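The sketch below shows one way to compute elasticity across a range of prices and quantities. It assumes elasticity is the percentage change in quantity divided by the percentage change in price. It also assumes the midpoint (arc) convention, which measures percentage changes against the average of the two observations. The function names and sample figures are hypothetical and for illustration only.

```python
def arc_elasticity(p1, q1, p2, q2):
    """Midpoint (arc) elasticity between two (price, quantity) observations.

    Assumption: percentage changes are taken relative to the midpoint of
    each pair, so the result is the same in either direction of change.
    """
    if p1 == p2:
        raise ValueError("price must change to compute elasticity")
    pct_change_q = (q2 - q1) / ((q1 + q2) / 2)
    pct_change_p = (p2 - p1) / ((p1 + p2) / 2)
    return pct_change_q / pct_change_p


def elasticities_over_range(prices, quantities):
    """Arc elasticity for each pair of adjacent observations in a price range."""
    if len(prices) != len(quantities):
        raise ValueError("prices and quantities must have the same length")
    return [
        arc_elasticity(prices[i], quantities[i], prices[i + 1], quantities[i + 1])
        for i in range(len(prices) - 1)
    ]


if __name__ == "__main__":
    # Hypothetical data: sales fall as the price rises.
    result = elasticities_over_range([10, 12, 14], [100, 80, 70])
    print([round(e, 2) for e in result])  # → [-1.22, -0.87]
```

Under this convention, the two segments of the same demand curve behave differently. Between prices 10 and 12, demand is elastic (magnitude above 1). Between 12 and 14, it is inelastic (magnitude below 1).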

Definition of elasticity:

Percentage change in sales volume (quantity demanded) divided by the percentage change in price.
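Written as a formula, using the starting price and quantity as the base for each percentage change, the definition becomes the expression below. The numbers in the worked example are hypothetical: the price rises from 10 to 11 (+10%) and sales volume falls from 100 to 90 (−10%).

$$
E = \frac{\%\Delta Q}{\%\Delta P} = \frac{(Q_2 - Q_1)/Q_1}{(P_2 - P_1)/P_1}
\qquad\text{e.g.}\qquad
E = \frac{(90 - 100)/100}{(11 - 10)/10} = \frac{-0.10}{0.10} = -1
$$

The negative sign reflects that sales volume moves in the opposite direction to price.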